Logs from Kubernetes Cluster

Send logs from Kubernetes Cluster using OpenTelemetry

Introduction

This guide will help you instrument your Kubernetes cluster with OpenTelemetry and send logs from all containers in the cluster to Last9.

Pre-requisites

- You have a Kubernetes cluster with a workload running in it.

- You have signed up for Last9, created a cluster, and obtained the following OTLP credentials from the Integrations page: endpoint and auth_header.

- Install Helm.

- Create a namespace named last9 in your Kubernetes cluster. We will use it to set up the OpenTelemetry Collector agent.
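The namespace step can be sketched as follows. This assumes kubectl is installed and configured for the target cluster, which this guide does not state explicitly. The helper name create_last9_namespace is hypothetical; the command is wrapped in a function so that nothing runs until you call it.

```shell
# Create the last9 namespace used by the OpenTelemetry Collector agent.
# Assumption: kubectl is installed and points at the target cluster.
create_last9_namespace() {
  kubectl create namespace last9
}

# Usage:
# create_last9_namespace
```

If the namespace already exists, kubectl reports an error and leaves the existing namespace untouched.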

Helm Chart Installation of OpenTelemetry Collector

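Copy the following Helm values file and save it locally as last9-otel-collector-values.yaml. Replace <LAST9_OTLP_ENDPOINT> and <LAST9_OTLP_AUTH_HEADER> with the credentials from the Integrations page.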

# Default values for opentelemetry-collector.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

nameOverride: "last9-otel-collector"
fullnameOverride: ""

# Valid values are "daemonset", "deployment", and "statefulset".
mode: "daemonset"

# Specify which namespace should be used to deploy the resources into
namespaceOverride: "last9"

# Handles basic configuration of components that
# also require k8s modifications to work correctly.
# .Values.config can be used to modify/add to a preset
# component configuration, but CANNOT be used to remove
# preset configuration. If you require removal of any
# sections of a preset configuration, you cannot use
# the preset. Instead, configure the component manually in
# .Values.config and use the other fields supplied in the
# values.yaml to configure k8s as necessary.
presets:
  # Configures the collector to collect logs.
  # Adds the filelog receiver to the logs pipeline
  # and adds the necessary volumes and volume mounts.
  # Best used with mode = daemonset.
  # See https://opentelemetry.io/docs/kubernetes/collector/components/#filelog-receiver for details on the receiver.
  logsCollection:
    enabled: true
    includeCollectorLogs: false
    # Enabling this writes checkpoints in /var/lib/otelcol/ host directory.
    # Note this changes collector's user to root, so that it can write to host directory.
    storeCheckpoints: false
    # The maximum bytes size of the recombined field.
    # Once the size exceeds the limit, all received entries of the source will be combined and flushed.
    maxRecombineLogSize: 102400
  # Configures the collector to collect host metrics.
  # Adds the hostmetrics receiver to the metrics pipeline
  # and adds the necessary volumes and volume mounts.
  # Best used with mode = daemonset.
  # See https://opentelemetry.io/docs/kubernetes/collector/components/#host-metrics-receiver for details on the receiver.
  hostMetrics:
    enabled: true
  # Configures the Kubernetes Processor to add Kubernetes metadata.
  # Adds the k8sattributes processor to all the pipelines
  # and adds the necessary rules to ClusterRole.
  # Best used with mode = daemonset.
  # See https://opentelemetry.io/docs/kubernetes/collector/components/#kubernetes-attributes-processor for details on the processor.
  kubernetesAttributes:
    enabled: true
    # When enabled the processor will extract all labels for an associated pod and add them as resource attributes.
    # The label's exact name will be the key.
    extractAllPodLabels: true
    # When enabled the processor will extract all annotations for an associated pod and add them as resource attributes.
    # The annotation's exact name will be the key.
    extractAllPodAnnotations: false
  # Configures the collector to collect node, pod, and container metrics from the API server on a kubelet.
  # Adds the kubeletstats receiver to the metrics pipeline
  # and adds the necessary rules to ClusterRole.
  # Best used with mode = daemonset.
  # See https://opentelemetry.io/docs/kubernetes/collector/components/#kubeletstats-receiver for details on the receiver.
  kubeletMetrics:
    enabled: true
  # Configures the collector to collect kubernetes events.
  # Adds the k8sobjects receiver to the logs pipeline
  # and collects kubernetes events by default.
  # Best used with mode = deployment or statefulset.
  # See https://opentelemetry.io/docs/kubernetes/collector/components/#kubernetes-objects-receiver for details on the receiver.
  kubernetesEvents:
    enabled: false
  # Configures the Kubernetes Cluster Receiver to collect cluster-level metrics.
  # Adds the k8s_cluster receiver to the metrics pipeline
  # and adds the necessary rules to ClusterRole.
  # Best used with mode = deployment or statefulset.
  # See https://opentelemetry.io/docs/kubernetes/collector/components/#kubernetes-cluster-receiver for details on the receiver.
  clusterMetrics:
    enabled: false

configMap:
  # Specifies whether a configMap should be created (true by default)
  create: true
  # Specifies an existing ConfigMap to be mounted to the pod
  # The ConfigMap MUST include the collector configuration via a key named 'relay' or the collector will not start.
  existingName: ""

# Base collector configuration.
config:
  exporters:
    # Use when you need to debug output
    # debug:
    #   verbosity: detailed
    #   sampling_initial: 5
    #   sampling_thereafter: 200
    otlp/last9:
      endpoint: "<LAST9_OTLP_ENDPOINT>"
      headers:
        "Authorization": "Basic <LAST9_OTLP_AUTH_HEADER>"
  extensions:
    # The health_check extension is mandatory for this chart.
    # Without the health_check extension the collector will fail the readiness and liveness probes.
    # The health_check extension can be modified, but should never be removed.
    health_check:
      endpoint: ${env:MY_POD_IP}:13133
  processors:
    transform/logs/last9:
      error_mode: ignore
      log_statements:
        - context: resource
          statements:
            # set the service name as app label of the container
            # - set(attributes["service.name"], attributes["<label_name>"]) where attributes["service.name"] == ""
            # Additional resource attributes can be set as follows.
            # - set(attributes["<region>"], value)
            # - set(attributes["deployment_environment"], "staging")
    batch:
      send_batch_size: 50000
      send_batch_max_size: 50000
      timeout: 5s
    # Default memory limiter configuration for the collector based on k8s resource limits.
    # This configuration is best suited when you are only shipping logs via the Otel Collector.
    # If you want to ship more telemetry signals, then consult with Last9 team to tweak the memory
    # limiter settings.
    memory_limiter/logs:
      # check_interval is the time between measurements of memory usage.
      check_interval: 5s
      # By default limit_mib is set to 85% of ".Values.resources.limits.memory"
      limit_percentage: 85
      # By default spike_limit_mib is set to 15% of ".Values.resources.limits.memory"
      spike_limit_percentage: 15
  receivers:
    otlp:
      protocols:
        grpc:
          endpoint: 0.0.0.0:4317
          max_recv_msg_size_mib: 16
        http:
          endpoint: 0.0.0.0:4318
  service:
    extensions:
      - health_check
    pipelines:
      logs:
        receivers:
          - otlp
          - filelog
        processors:
          - memory_limiter/logs
          - k8sattributes
          - transform/logs/last9
          - batch
        exporters:
          - otlp/last9
      metrics:
        receivers:
          - otlp
          - prometheus
          - hostmetrics
          - kubeletstats
        exporters:
          - otlp/last9
      traces:
        receivers:
          - otlp
        exporters:
          - otlp/last9

image:
  # If you want to use the core image `otel/opentelemetry-collector`, you also need to change `command.name` value to `otelcol`.
  repository: "otel/opentelemetry-collector-contrib"
  pullPolicy: IfNotPresent
  # Overrides the image tag whose default is the chart appVersion.
  tag: "0.107.0"
  # When digest is set to a non-empty value, images will be pulled by digest (regardless of tag value).
  digest: ""
imagePullSecrets: []

# OpenTelemetry Collector executable
command:
  name: ""
  extraArgs: []

serviceAccount:
  # Specifies whether a service account should be created
  create: true
  # Annotations to add to the service account
  annotations: {}
  # The name of the service account to use.
  # If not set and create is true, a name is generated using the fullname template
  name: ""

clusterRole:
  # Specifies whether a clusterRole should be created
  # Some presets also trigger the creation of a cluster role and cluster role binding.
  # If using one of those presets, this field is no-op.
  create: false
  # Annotations to add to the clusterRole
  # Can be used in combination with presets that create a cluster role.
  annotations: {}
  # The name of the clusterRole to use.
  # If not set a name is generated using the fullname template
  # Can be used in combination with presets that create a cluster role.
  name: ""
  # A set of rules as documented here : https://kubernetes.io/docs/reference/access-authn-authz/rbac/
  # Can be used in combination with presets that create a cluster role to add additional rules.
  rules: []
  # - apiGroups:
  #   - ''
  #   resources:
  #   - 'pods'
  #   - 'nodes'
  #   verbs:
  #   - 'get'
  #   - 'list'
  #   - 'watch'

  clusterRoleBinding:
    # Annotations to add to the clusterRoleBinding
    # Can be used in combination with presets that create a cluster role binding.
    annotations: {}
    # The name of the clusterRoleBinding to use.
    # If not set a name is generated using the fullname template
    # Can be used in combination with presets that create a cluster role binding.
    name: ""

podSecurityContext: {}
securityContext: {}

nodeSelector: {}
tolerations: []
affinity: {}
topologySpreadConstraints: []

# Allows for pod scheduler prioritisation
priorityClassName: ""

extraEnvs: []
extraEnvsFrom: []
# This also supports template content, which will eventually be converted to yaml.
extraVolumes: []
# This also supports template content, which will eventually be converted to yaml.
extraVolumeMounts: []

# Configuration for ports
# nodePort is also allowed
ports:
  otlp:
    enabled: false
    containerPort: 4317
    servicePort: 4317
    hostPort: 4317
    protocol: TCP
    # nodePort: 30317
    appProtocol: grpc
  otlp-http:
    enabled: false
    containerPort: 4318
    servicePort: 4318
    hostPort: 4318
    protocol: TCP
  metrics:
    # The metrics port is disabled by default. However you need to enable the port
    # in order to use the ServiceMonitor (serviceMonitor.enabled) or PodMonitor (podMonitor.enabled).
    enabled: false
    containerPort: 8888
    servicePort: 8888
    protocol: TCP

# When enabled, the chart will set the GOMEMLIMIT env var to 80% of the configured resources.limits.memory.
# If no resources.limits.memory are defined then enabling does nothing.
# It is HIGHLY recommended to enable this setting and set a value for resources.limits.memory.
useGOMEMLIMIT: true

# Resource limits & requests.
# It is HIGHLY recommended to set resource limits.
resources: {}
# resources:
#   limits:
#     cpu: 250m
#     memory: 512Mi

podAnnotations: {}

podLabels: {}

# Common labels to add to all otel-collector resources. Evaluated as a template.
additionalLabels: {}
#  app.kubernetes.io/part-of: my-app

# Host networking requested for this pod. Use the host's network namespace.
hostNetwork: false

# Adding entries to Pod /etc/hosts with HostAliases
# https://kubernetes.io/docs/tasks/network/customize-hosts-file-for-pods/
hostAliases: []
  # - ip: "1.2.3.4"
  #   hostnames:
  #     - "my.host.com"

# Pod DNS policy ClusterFirst, ClusterFirstWithHostNet, None, Default
dnsPolicy: ""

# Custom DNS config. Required when DNS policy is None.
dnsConfig: {}

# only used with deployment mode
replicaCount: 1

# only used with deployment mode
revisionHistoryLimit: 10

annotations: {}

# List of extra sidecars to add.
# This also supports template content, which will eventually be converted to yaml.
extraContainers: []
# extraContainers:
#   - name: test
#     command:
#       - cp
#     args:
#       - /bin/sleep
#       - /test/sleep
#     image: busybox:latest
#     volumeMounts:
#       - name: test
#         mountPath: /test

# List of init container specs, e.g. for copying a binary to be executed as a lifecycle hook.
# This also supports template content, which will eventually be converted to yaml.
# Another usage of init containers is e.g. initializing filesystem permissions to the OTLP Collector user `10001` in case you are using persistence and the volume is producing a permission denied error for the OTLP Collector container.
initContainers: []
# initContainers:
#   - name: test
#     image: busybox:latest
#     command:
#       - cp
#     args:
#       - /bin/sleep
#       - /test/sleep
#     volumeMounts:
#       - name: test
#         mountPath: /test
#   - name: init-fs
#     image: busybox:latest
#     command:
#       - sh
#       - '-c'
#       - 'chown -R 10001: /var/lib/storage/otc' # use the path given as per `extensions.file_storage.directory` & `extraVolumeMounts[x].mountPath`
#     volumeMounts:
#       - name: opentelemetry-collector-data # use the name of the volume used for persistence
#         mountPath: /var/lib/storage/otc # use the path given as per `extensions.file_storage.directory` & `extraVolumeMounts[x].mountPath`

# Pod lifecycle policies.
lifecycleHooks: {}
# lifecycleHooks:
#   preStop:
#     exec:
#       command:
#       - /test/sleep
#       - "5"

# liveness probe configuration
# Ref: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/
##
livenessProbe:
  # Number of seconds after the container has started before startup, liveness or readiness probes are initiated.
  # initialDelaySeconds: 1
  # How often in seconds to perform the probe.
  # periodSeconds: 10
  # Number of seconds after which the probe times out.
  # timeoutSeconds: 1
  # Minimum consecutive failures for the probe to be considered failed after having succeeded.
  # failureThreshold: 1
  # Duration in seconds the pod needs to terminate gracefully upon probe failure.
  # terminationGracePeriodSeconds: 10
  httpGet:
    port: 13133
    path: /

# readiness probe configuration
# Ref: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/
##
readinessProbe:
  # Number of seconds after the container has started before startup, liveness or readiness probes are initiated.
  # initialDelaySeconds: 1
  # How often (in seconds) to perform the probe.
  # periodSeconds: 10
  # Number of seconds after which the probe times out.
  # timeoutSeconds: 1
  # Minimum consecutive successes for the probe to be considered successful after having failed.
  # successThreshold: 1
  # Minimum consecutive failures for the probe to be considered failed after having succeeded.
  # failureThreshold: 1
  httpGet:
    port: 13133
    path: /

service:
  # Enable the creation of a Service.
  # By default, it's enabled on mode != daemonset.
  # However, to enable it on mode = daemonset, its creation must be explicitly enabled
  # enabled: true

  type: ClusterIP
  # type: LoadBalancer
  # loadBalancerIP: 1.2.3.4
  # loadBalancerSourceRanges: []

  # By default, Service of type 'LoadBalancer' will be created setting 'externalTrafficPolicy: Cluster'
  # unless other value is explicitly set.
  # Possible values are Cluster or Local (https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip)
  # externalTrafficPolicy: Cluster

  annotations: {}

  # By default, Service will be created setting 'internalTrafficPolicy: Local' on mode = daemonset
  # unless other value is explicitly set.
  # Setting 'internalTrafficPolicy: Cluster' on a daemonset is not recommended
  # internalTrafficPolicy: Cluster

ingress:
  enabled: false
  # annotations: {}
  # ingressClassName: nginx
  # hosts:
  #   - host: collector.example.com
  #     paths:
  #       - path: /
  #         pathType: Prefix
  #         port: 4318
  # tls:
  #   - secretName: collector-tls
  #     hosts:
  #       - collector.example.com

  # Additional ingresses - only created if ingress.enabled is true
  # Useful for when differently annotated ingress services are required
  # Each additional ingress needs key "name" set to something unique
  additionalIngresses: []
  # - name: cloudwatch
  #   ingressClassName: nginx
  #   annotations: {}
  #   hosts:
  #     - host: collector.example.com
  #       paths:
  #         - path: /
  #           pathType: Prefix
  #           port: 4318
  #   tls:
  #     - secretName: collector-tls
  #       hosts:
  #         - collector.example.com

podMonitor:
  # The pod monitor by default scrapes the metrics port.
  # The metrics port needs to be enabled as well.
  enabled: false
  metricsEndpoints:
    - port: metrics
      # interval: 15s

  # additional labels for the PodMonitor
  extraLabels: {}
  #   release: kube-prometheus-stack

serviceMonitor:
  # The service monitor by default scrapes the metrics port.
  # The metrics port needs to be enabled as well.
  enabled: false
  metricsEndpoints:
    - port: metrics
      # interval: 15s

  # additional labels for the ServiceMonitor
  extraLabels: {}
  #  release: kube-prometheus-stack
  # Used to set relabeling and metricRelabeling configs on the ServiceMonitor
  # https://prometheus.io/docs/prometheus/latest/configuration/configuration/#relabel_config
  relabelings: []
  metricRelabelings: []

# PodDisruptionBudget is used only if deployment enabled
podDisruptionBudget:
  enabled: false
#   minAvailable: 2
#   maxUnavailable: 1

# autoscaling is used only if mode is "deployment" or "statefulset"
autoscaling:
  enabled: false
  minReplicas: 1
  maxReplicas: 10
  behavior: {}
  targetCPUUtilizationPercentage: 80
  # targetMemoryUtilizationPercentage: 80

rollout:
  rollingUpdate: {}
  # When 'mode: daemonset', maxSurge cannot be used when hostPort is set for any of the ports
  # maxSurge: 25%
  # maxUnavailable: 0
  strategy: RollingUpdate

prometheusRule:
  enabled: false
  groups: []
  # Create default rules for monitoring the collector
  defaultRules:
    enabled: false

  # additional labels for the PrometheusRule
  extraLabels: {}

statefulset:
  # volumeClaimTemplates for a statefulset
  volumeClaimTemplates: []
  podManagementPolicy: "Parallel"
  # Controls if and how PVCs created by the StatefulSet are deleted. Available in Kubernetes 1.23+.
  persistentVolumeClaimRetentionPolicy:
    enabled: false
    whenDeleted: Retain
    whenScaled: Retain

networkPolicy:
  enabled: false

  # Annotations to add to the NetworkPolicy
  annotations: {}

  # Configure the 'from' clause of the NetworkPolicy.
  # By default this will restrict traffic to ports enabled for the Collector. If
  # you wish to further restrict traffic to other hosts or specific namespaces,
  # see the standard NetworkPolicy 'spec.ingress.from' definition for more info:
  # https://kubernetes.io/docs/reference/kubernetes-api/policy-resources/network-policy-v1/
  allowIngressFrom: []
  # # Allow traffic from any pod in any namespace, but not external hosts
  # - namespaceSelector: {}
  # # Allow external access from a specific cidr block
  # - ipBlock:
  #     cidr: 192.168.1.64/32
  # # Allow access from pods in specific namespaces
  # - namespaceSelector:
  #     matchExpressions:
  #       - key: kubernetes.io/metadata.name
  #         operator: In
  #         values:
  #           - "cats"
  #           - "dogs"

  # Add additional ingress rules to specific ports
  # Useful to allow external hosts/services to access specific ports
  # An example is allowing an external prometheus server to scrape metrics
  #
  # See the standard NetworkPolicy 'spec.ingress' definition for more info:
  # https://kubernetes.io/docs/reference/kubernetes-api/policy-resources/network-policy-v1/
  extraIngressRules: []
  # - ports:
  #   - port: metrics
  #     protocol: TCP
  #   from:
  #     - ipBlock:
  #         cidr: 192.168.1.64/32

  # Restrict egress traffic from the OpenTelemetry collector pod
  # See the standard NetworkPolicy 'spec.egress' definition for more info:
  # https://kubernetes.io/docs/reference/kubernetes-api/policy-resources/network-policy-v1/
  egressRules: []
  # - to:
  #     - namespaceSelector: {}
  #     - ipBlock:
  #         cidr: 192.168.10.10/24
  #   ports:
  #     - port: 1234
  #       protocol: TCP

# Allow containers to share processes across pod namespace
shareProcessNamespace: false
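Before installing, the two placeholders in the values file need real credentials. The following is a minimal sketch of one way to substitute them, not a required step: the helper name fill_last9_credentials and the sample arguments are hypothetical. The auth header value is inserted after the "Basic " prefix that the file already contains.

```shell
# Replace the credential placeholders in a values file.
#   $1: path to the values file
#   $2: OTLP endpoint from the Integrations page
#   $3: auth header value from the Integrations page
# Assumption: neither value contains "|", "&", or "\", which are special to sed here.
fill_last9_credentials() {
  values_file="$1"
  endpoint="$2"
  auth_header="$3"
  # "|" is the sed delimiter because endpoints contain slashes.
  sed -e "s|<LAST9_OTLP_ENDPOINT>|${endpoint}|" \
      -e "s|<LAST9_OTLP_AUTH_HEADER>|${auth_header}|" \
      "${values_file}" > "${values_file}.tmp" \
    && mv "${values_file}.tmp" "${values_file}"
}

# Usage (placeholder arguments, not real credentials):
# fill_last9_credentials last9-otel-collector-values.yaml "<your endpoint>" "<your auth header>"
```

Writing to a temporary file and renaming it avoids relying on sed -i, whose syntax differs between GNU and BSD sed.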

What does this Helm chart do?

This Helm chart installs the OpenTelemetry Collector in agent mode on every Kubernetes node in your cluster, using the daemonset mode.

It configures the logging pipeline to send logs from all containers on the node to Last9.

It also sends health metrics from the Otel Collector to Last9 so that Last9 can monitor the ingestion pipeline as well.

Setting additional log attributes

You can set additional log attributes by configuring the transform processor.

transform/logs/last9:
  error_mode: ignore
  log_statements:
    - context: resource
      statements:
        - set(attributes["service.name"], attributes["k8s.container.name"]) where attributes["service.name"] == ""
        # Additional resource attributes can be set as follows.
        # Use additional resource attributes to differentiate between clusters and deployments.
        # set(attributes["<key>"], value)
        - set(attributes["deployment_environment"], "staging")

Note: do not remove the service.name transformation. Last9 needs it for accelerated queries to kick in.

Installation

Make sure that helm is installed and up-to-date.

helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts

helm repo update

helm upgrade --install last9-opentelemetry-collector open-telemetry/opentelemetry-collector -f last9-otel-collector-values.yaml

It is highly recommended to use a dedicated namespace for the OpenTelemetry Collector and to check the values file into version control.

Verification

Verify that the helm chart is installed correctly. If all goes well, you should see a message along the following lines.

Release "last9-opentelemetry-collector" has been upgraded. Happy Helming!

NAME: last9-opentelemetry-collector

LAST DEPLOYED: Mon Aug 19 13:24:09 2024

NAMESPACE: default

STATUS: deployed

REVISION: 4

TEST SUITE: None

NOTES:

[WARNING] No resource limits or requests were set. Consider setter resource requests and limits for your collector(s) via the `resources` field.

[WARNING] "useGOMEMLIMIT" is enabled but memory limits have not been supplied so the GOMEMLIMIT env var could not be added. Solve this problem by setting resources.limits.memory or disabling useGOMEMLIMIT

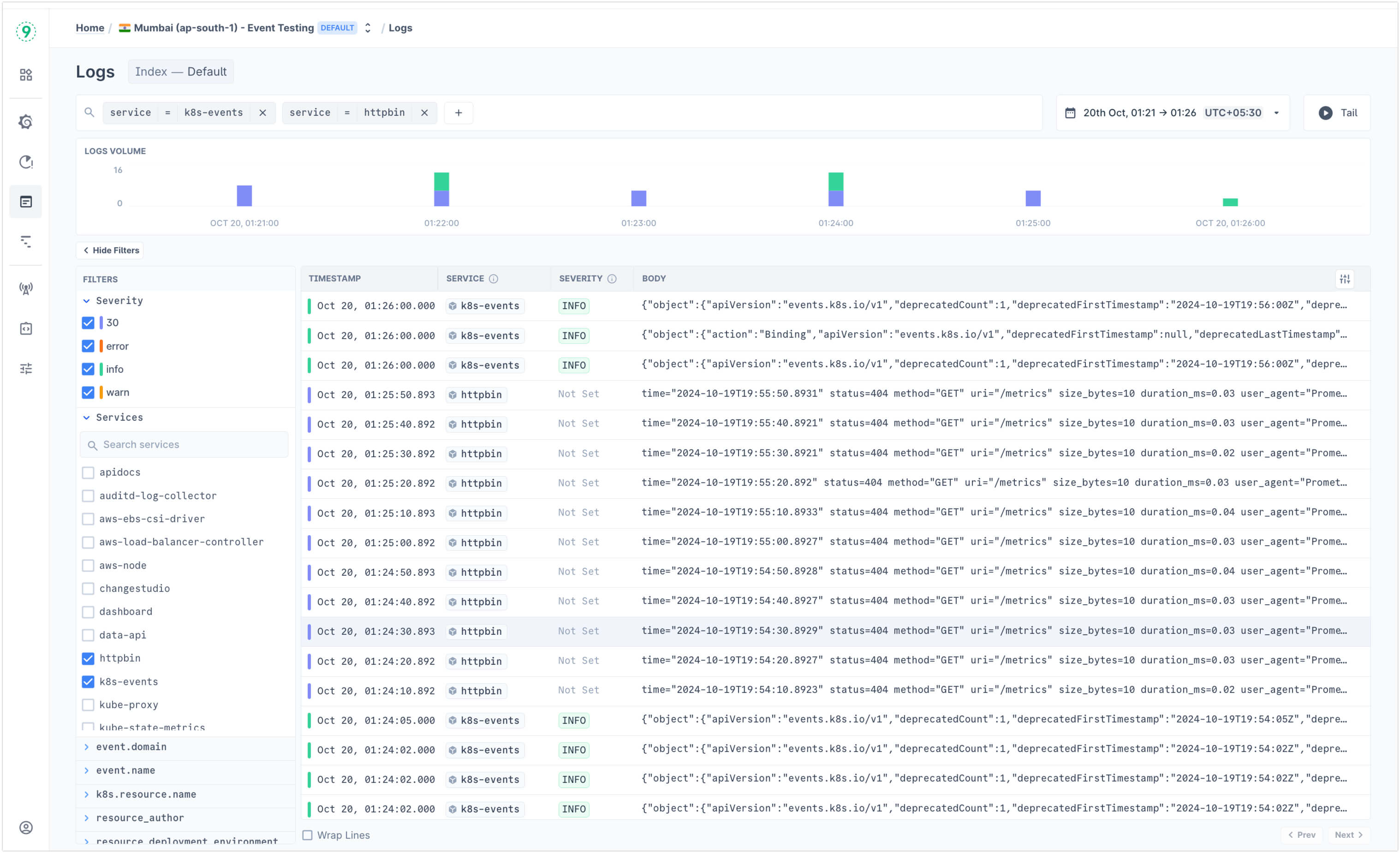

You can also visit the Logs Dashboard to see the data in action.
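To confirm from the cluster side that the collector is running on each node, something along these lines may help. It assumes kubectl is configured for the cluster and that the collector resources land in the last9 namespace because of namespaceOverride in the values file. The helper name check_last9_collector is hypothetical.

```shell
# List the collector DaemonSet and its pods in the last9 namespace.
# Assumption: kubectl is installed and points at the target cluster.
check_last9_collector() {
  kubectl get daemonset,pods --namespace last9 -o wide
}

# Usage:
# check_last9_collector
```

With mode set to daemonset, you would expect one collector pod per schedulable node, each in the Running state.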

Advanced settings

You can configure CPU and memory limits in the resources section as needed. By default, no limits are set.

resources: {}
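As a sketch, limits can also be passed on the command line when re-running the install. The values 250m and 512Mi are taken from the commented sample in the values file and are only a starting point. The helper name install_with_limits is hypothetical. Setting resources.limits.memory also addresses the useGOMEMLIMIT warning shown in the install output.

```shell
# Re-run the install with CPU and memory limits for the collector.
# 250m and 512Mi mirror the commented sample in the values file; tune them for your log volume.
install_with_limits() {
  helm upgrade --install last9-opentelemetry-collector \
    open-telemetry/opentelemetry-collector \
    -f last9-otel-collector-values.yaml \
    --set resources.limits.cpu=250m \
    --set resources.limits.memory=512Mi
}

# Usage:
# install_with_limits
```

Editing the resources block in last9-otel-collector-values.yaml achieves the same result and keeps the setting in version control.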

Verification

Log in to Last9 and visit the Logs panel.

Troubleshooting

Please get in touch with us on Discord or by email if you have any questions.