Ingestion

Control Plane tools to configure how your data is ingested into Last9.

Ingestion is the second pillar of Last9, our telemetry data platform. Once you've instrumented your system, controls over how your telemetry data flows into Last9 do a fair bit of heavy lifting.

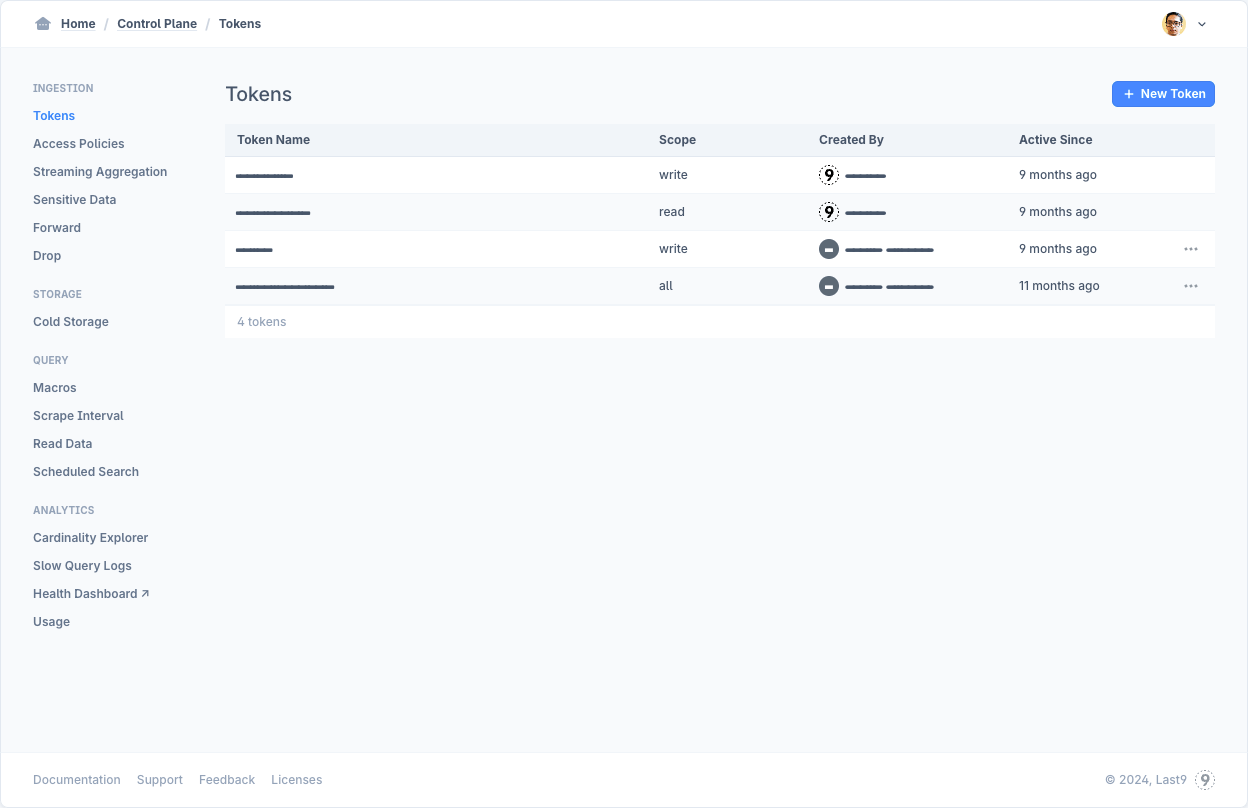

Tokens

Use tokens along with Access Policies to scope access.

The system creates one write token and one read token when you sign up. The write token is used in the setup wizard to help you configure sending data to Last9, and the read token is used to set up dashboard templates, including the Health Dashboard. System-generated tokens cannot be deleted.

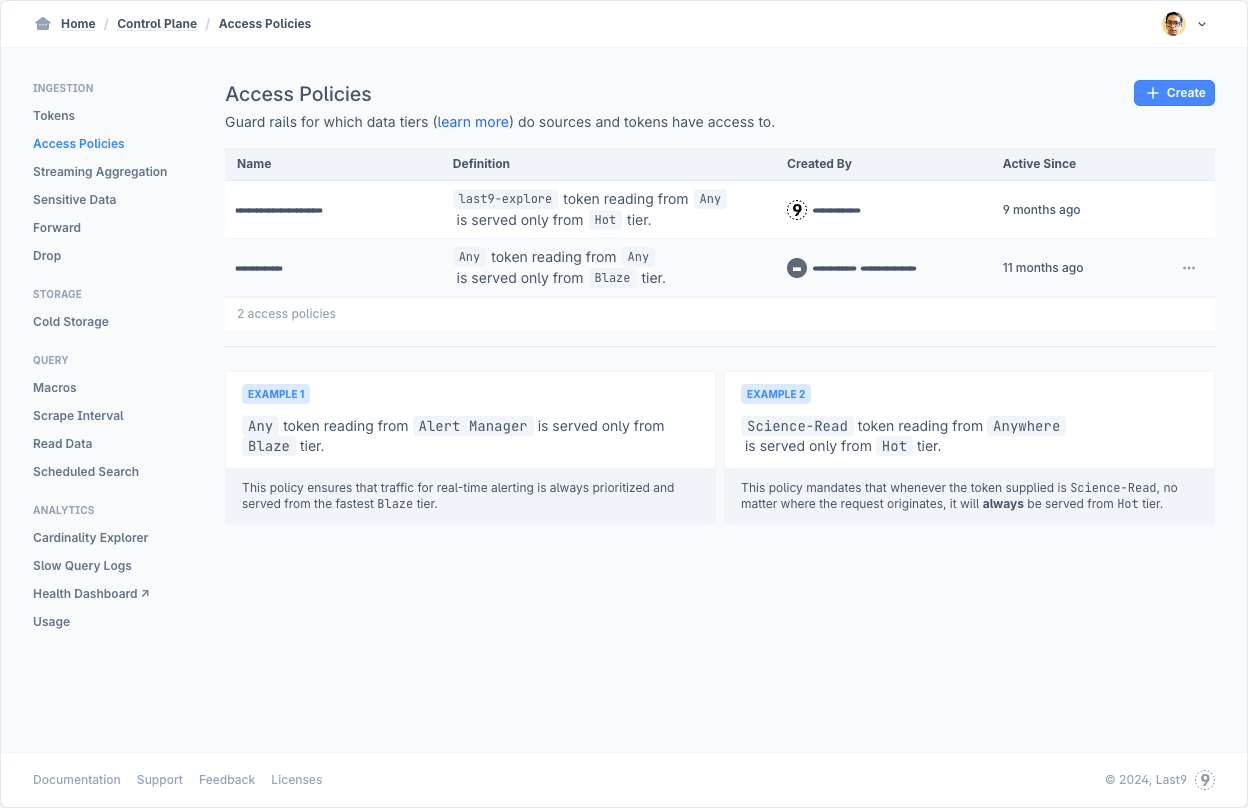

Access Policies

Set up how various clients access your data. Depending on the client type and token used, you can control which tier (Blaze, Hot, Cold) your data is queried from. We recommend that alerting workloads always use the Blaze Tier and that reporting workloads use the Cold Tier. Read more on how to configure these policies.
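As a rough mental model, an access policy can be pictured as a lookup from client type and token to a tier. The sketch below is illustrative only: the `POLICIES` table, the token names, the `resolve_tier` helper, and the fallback to the Hot Tier are all assumptions for this example, not Last9's configuration format or behavior.

```python
# Hypothetical policy table: (client type, token name) -> storage tier.
# Token names and the fallback tier are made up for illustration.
POLICIES = {
    ("alerting", "alerts-read"): "blaze",
    ("reporting", "reports-read"): "cold",
}
DEFAULT_TIER = "hot"  # assumption: used when no policy matches


def resolve_tier(client_type, token, policies=POLICIES):
    """Return the tier a query would be served from under this toy model."""
    return policies.get((client_type, token), DEFAULT_TIER)


print(resolve_tier("alerting", "alerts-read"))
print(resolve_tier("reporting", "reports-read"))
print(resolve_tier("dashboard", "some-other-token"))
```

The point of the sketch is that the same data can be served from different tiers depending on who is asking, which is why alerting and reporting clients are best given separate tokens.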

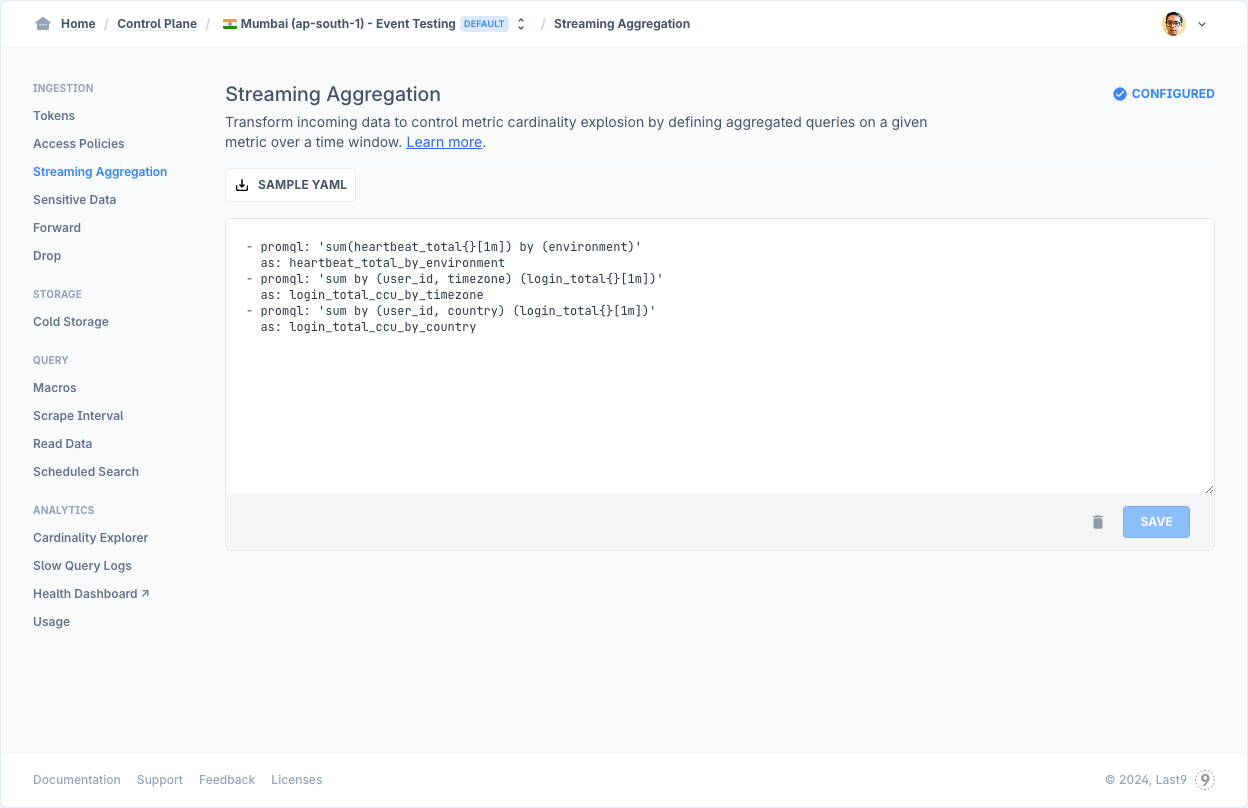

Streaming Aggregations

Streaming Aggregations let you transform data in real time at the ingestion layer, before it is stored in Last9. They let you generate scoped metrics at runtime without any instrumentation changes, and they improve the performance of your read queries by controlling the cardinality of the new metrics. Read more on how to configure these aggregations.
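To see why aggregating at ingestion controls cardinality, consider a minimal sketch that sums samples after discarding high-cardinality labels. The `aggregate` function, the label names, and the sample values are hypothetical; this illustrates the general idea and is not how Last9 implements or configures streaming aggregations.

```python
from collections import defaultdict


def aggregate(samples, keep_labels):
    """Sum sample values after dropping every label not in keep_labels."""
    totals = defaultdict(float)
    for labels, value in samples:
        # The series key is built only from the labels we keep.
        key = tuple(sorted((k, v) for k, v in labels.items() if k in keep_labels))
        totals[key] += value
    return dict(totals)


# Three input series that differ by pod and status (made-up data).
samples = [
    ({"service": "checkout", "pod": "a1", "status": "200"}, 5.0),
    ({"service": "checkout", "pod": "b2", "status": "200"}, 3.0),
    ({"service": "checkout", "pod": "a1", "status": "500"}, 1.0),
]

# Dropping the "pod" label collapses three series into two.
per_status = aggregate(samples, keep_labels={"service", "status"})
for key, total in per_status.items():
    print(dict(key), total)
```

Queries against the aggregated metric touch fewer series than queries that aggregate the raw data at read time, which is where the read-performance gain comes from.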

The following configurations are applied in the order presented: your telemetry data is first scanned for sensitive data, then data matching a Forward rule is forwarded, and finally data matching a Drop rule is dropped. These configurations give you run-time control without any instrumentation-level changes.
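The ordering described above can be sketched as a small function. Everything here is hypothetical (the `process` function, the record fields, and the example rules); it only illustrates that a record matching both a forward rule and a drop rule would be forwarded, because forwarding is evaluated first. It also assumes that later stages see the already-redacted record.

```python
def process(record, redact, forward_match, drop_match):
    """Apply the three stages in order: sensitive-data scan, forward, drop."""
    record = redact(record)          # 1. scan and redact sensitive data
    if forward_match(record):        # 2. forward matching data to cold storage
        return "forwarded", record
    if drop_match(record):           # 3. drop matching data
        return "dropped", None
    return "ingested", record        # everything else is ingested as usual


# Toy stand-ins for configured rules (assumptions for this example).
def redact(record):
    return {**record, "body": record["body"].replace("secret", "[REDACTED]")}


def forward_match(record):
    return record.get("env") == "staging"


def drop_match(record):
    return record.get("level") == "debug"


outcome, result = process(
    {"env": "staging", "level": "debug", "body": "token=secret"},
    redact, forward_match, drop_match,
)
print(outcome, result)
```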

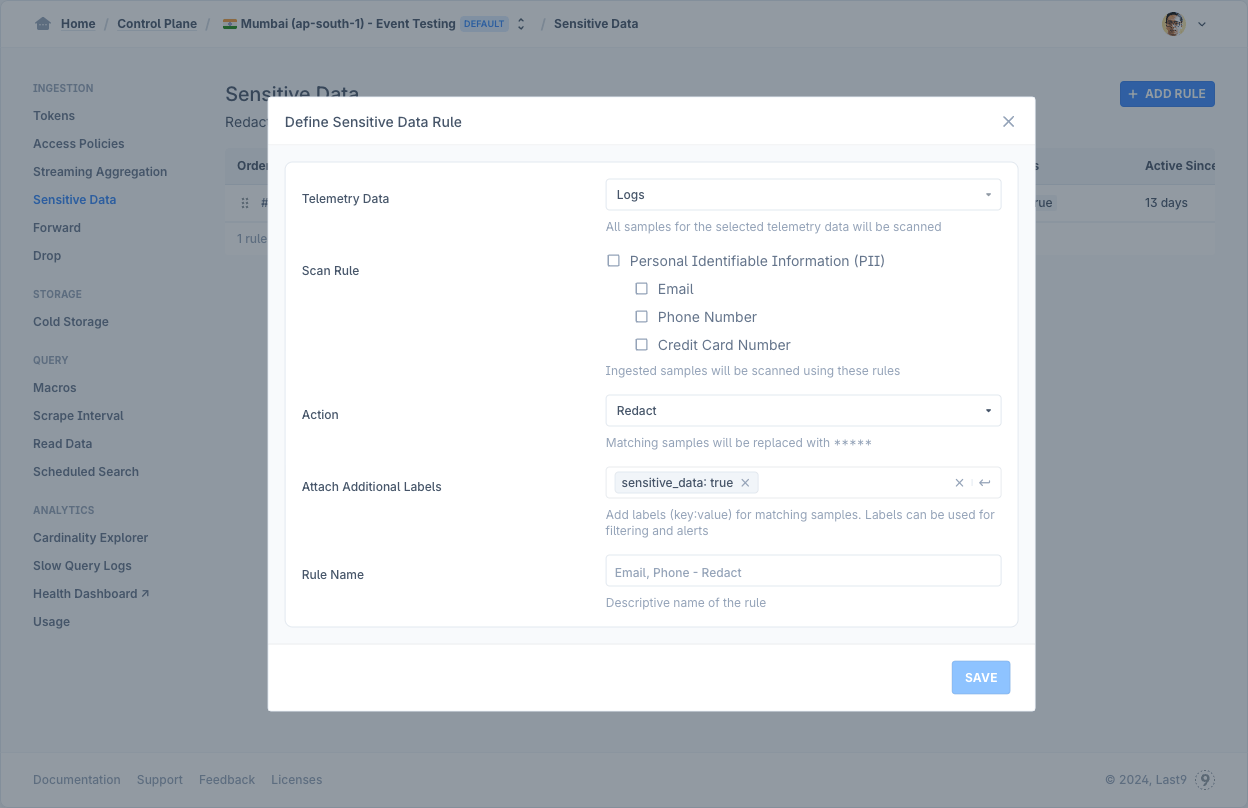

Sensitive Data

Redact sensitive data from your telemetry data at the time of ingestion. Currently supported:

- Telemetry Type: Logs

- Actions: Redact (default), No Action

Last9 provides built-in scan rules for PII like emails, phone numbers, and credit card numbers. While the default action is to redact, you can also choose to take no action. This is particularly helpful when you just want to attach additional labels to the telemetry.

Configured rules are applied sequentially. Once saved, you can drag and drop the rules to reorder them.
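A minimal sketch of sequential rule application, assuming each rule is a name, a pattern, and an action. The regular expressions are deliberately simplistic and are not Last9's built-in scan rules; the `RULES` list, the `apply_rules` helper, and the `[REDACTED]` placeholder are all assumptions for illustration.

```python
import re

# Hypothetical rules, applied top to bottom: (name, pattern, action).
RULES = [
    ("email", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "redact"),
    ("phone", re.compile(r"\+?\d[\d\s-]{8,}\d"), "no_action"),
]


def apply_rules(line, rules=RULES):
    """Return the processed log line and the names of the rules that matched."""
    labels = []
    for name, pattern, action in rules:
        if pattern.search(line):
            labels.append(name)  # a match can be recorded even with no action
            if action == "redact":
                line = pattern.sub("[REDACTED]", line)
    return line, labels


line, labels = apply_rules("user bob@example.com called +1 415-555-0100")
print(line)
print(labels)
```

Because each rule sees the output of the rules before it, reordering rules can change the result, for example when an earlier redaction removes text that a later rule would otherwise have matched.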

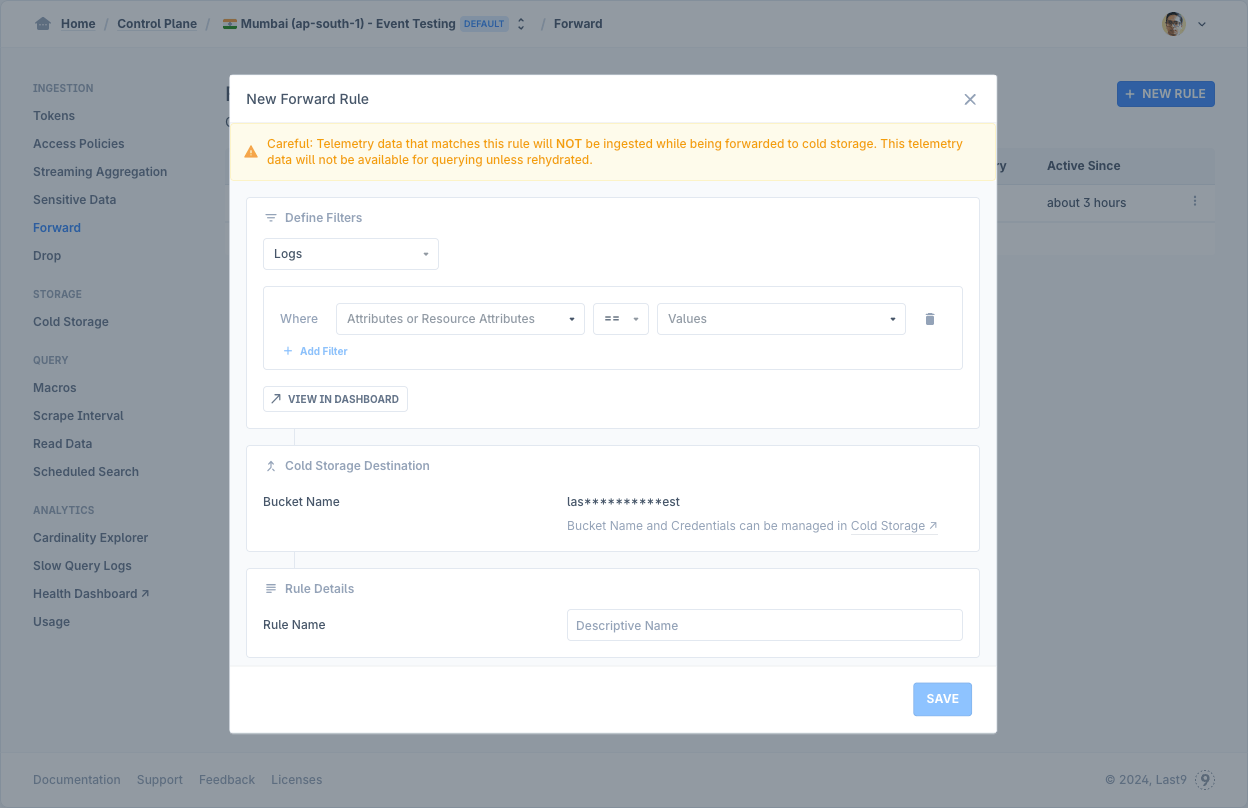

Forward

Data past its applicable retention period can be moved to your configured S3 bucket for Cold Storage. You can also configure rules with == and != matching filters to forward incoming data directly to your cold storage, without it being ingested and stored. To verify the filters before saving the rule, click "View in Dashboard".

Note that forwarded data is not available for querying until it is rehydrated.

Supported telemetry types: Logs and Traces.

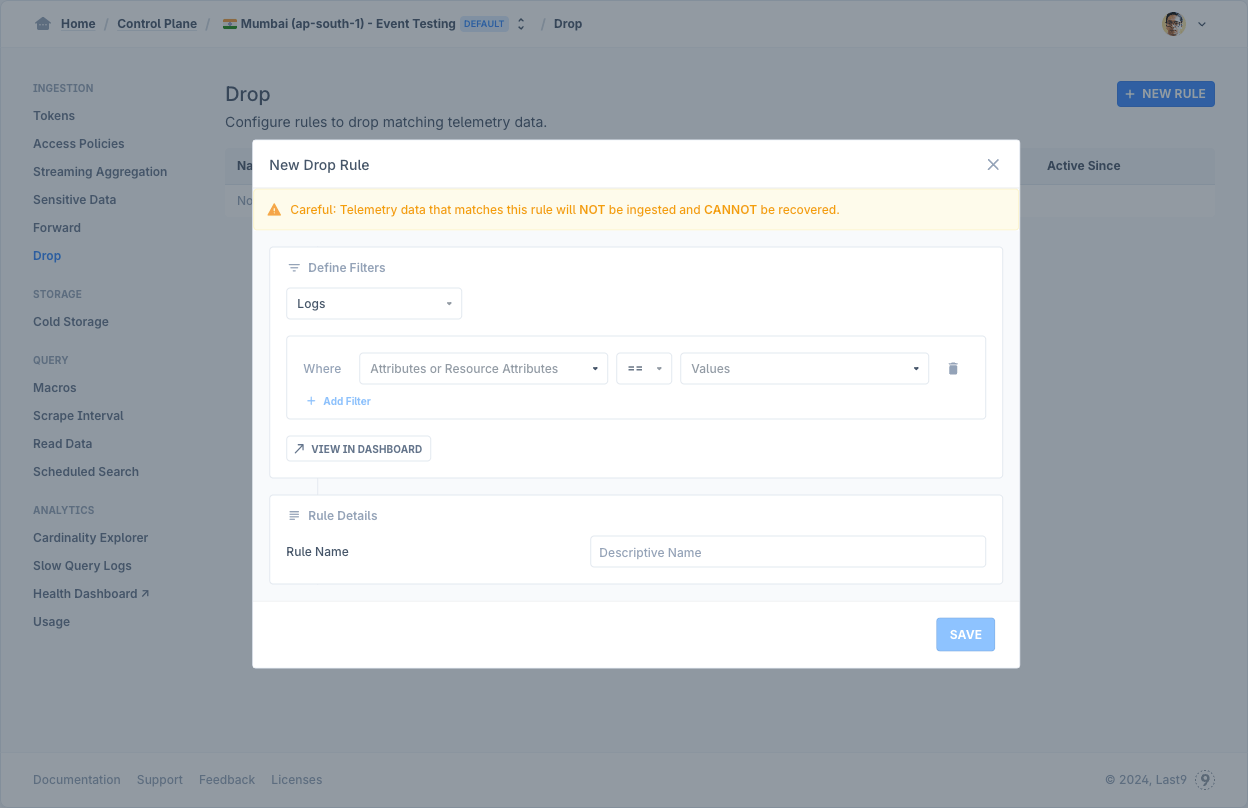

Drop

You can configure rules with == and != matching filters to drop incoming data. Note that dropped data is not ingested and cannot be recovered. To verify the filters before saving the rule, click "View in Dashboard".

Supported telemetry types: Logs, Metrics and Traces.
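The == and != matching filters used by Forward and Drop rules can be pictured with a small sketch. The `matches` function and the attribute names are hypothetical, and two behaviors are assumptions rather than documented facts: that all filters in a rule must hold together, and that a missing attribute satisfies a != filter.

```python
def matches(record, filters):
    """Return True if the record satisfies every (attribute, op, value) filter."""
    for attribute, op, value in filters:
        actual = record.get(attribute)  # None when the attribute is absent
        if op == "==":
            if actual != value:
                return False
        elif op == "!=":
            if actual == value:
                return False
        else:
            raise ValueError(f"unsupported operator: {op}")
    return True


# Hypothetical rule: debug logs from anything except the payments service.
rule = [("level", "==", "debug"), ("service", "!=", "payments")]

print(matches({"level": "debug", "service": "search"}, rule))
print(matches({"level": "debug", "service": "payments"}, rule))
print(matches({"level": "info", "service": "search"}, rule))
```

Since dropped data cannot be recovered, it is worth checking a rule against real traffic with "View in Dashboard" before saving it.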

Troubleshooting

Please get in touch with us on Discord or Email if you have any questions.